1. Computational imaging with camera arrays

Camera-array imaging uses a large number of inexpensive cameras to create virtual cameras that outperform real ones. Changing the arrangement and aiming of the cameras lets the array function in many different ways. We developed an unstructured gigapixel array camera (UnstructuredCam) whose resolution exceeds that of a single camera and of human visual perception, and which captures large-scale dynamic scenes with both a wide field of view (FoV) and high resolution. The technology was successfully applied at the 2022 Beijing Winter Olympics and, as one of its key innovations, received the First Prize for Technological Invention in the 2021 Beijing Municipal Science and Technology Awards (2021年度北京市科学技术奖励技术发明一等奖). For more details, please visit the Project page.

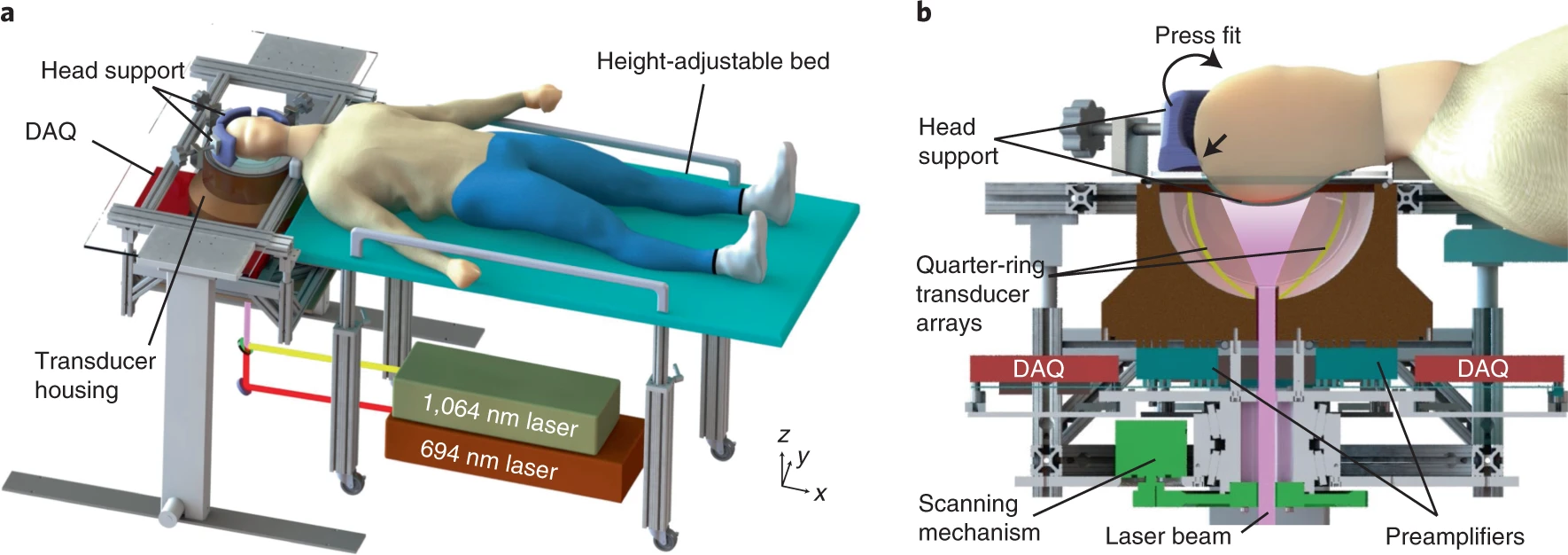

2. Photoacoustic tomography for the human brain

Functional photoacoustic computed tomography (fPACT) is label-free and allows real-time imaging at high resolution. In photoacoustic imaging, pulsed laser light delivered into tissue is absorbed and generates heat, which causes local thermoelastic expansion of the tissue. The expansion produces transient pressure changes that can be detected by conventional ultrasonic transducers. fPACT can map neural activity in the brain by imaging blood oxygen saturation (activated neurons consume more oxygen, and oxyhaemoglobin and deoxyhaemoglobin have different absorption coefficients) and by discriminating venous blood from arterial blood. We report an implementation of fPACT that allows real-time functional imaging of the adult human brain at a spatial resolution of 350 μm and a temporal resolution of 2 s. This system received the Best Paper Award at SPIE Photonics West 2021. For more details, please visit the Project page.

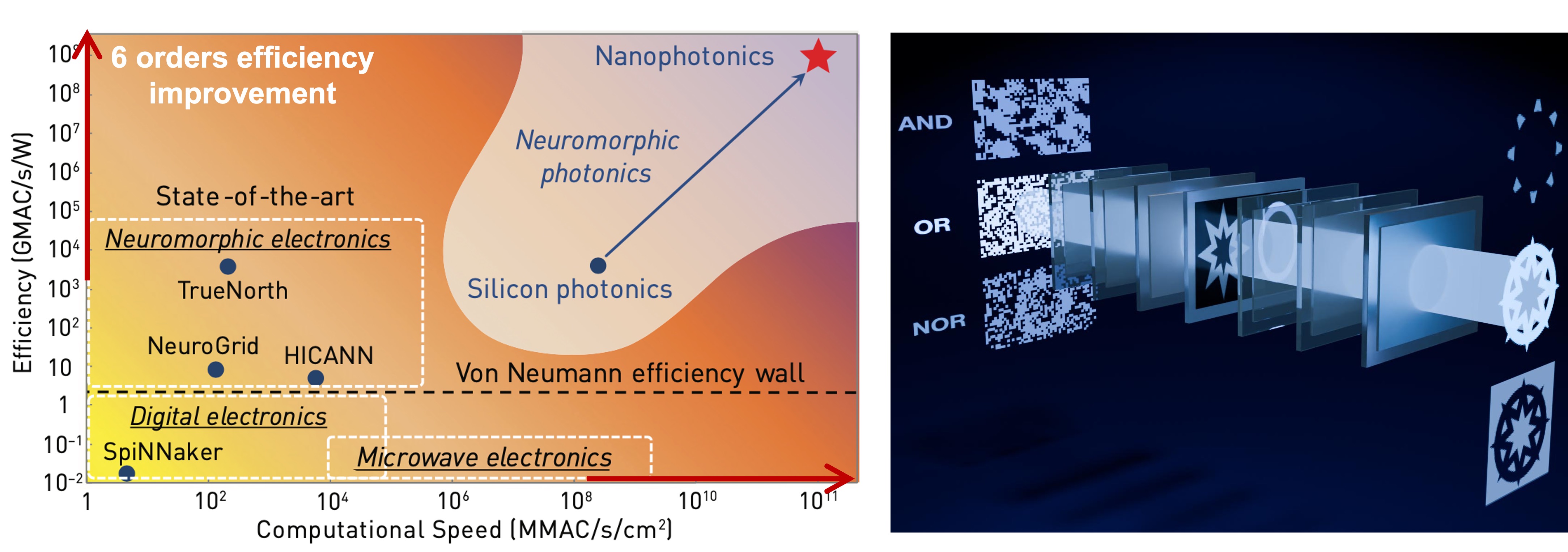
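
The dependence of oxygen saturation on the two haemoglobin absorption spectra can be illustrated with a small two-wavelength unmixing sketch. This is a generic textbook-style illustration, not the reconstruction used in the fPACT system. It assumes that fluence-compensated absorption coefficients are already available at two wavelengths, and the extinction coefficients in `EPS` are placeholder values chosen for illustration; the names `EPS` and `estimate_so2` are hypothetical.

```python
import numpy as np

# Placeholder molar extinction coefficients [cm^-1 / M] at two wavelengths.
# Illustrative values only (an assumption); use tabulated spectra in practice.
EPS = np.array([
    [500.0, 1500.0],   # wavelength 1: [eps_HbO2, eps_Hb]
    [1100.0, 800.0],   # wavelength 2: [eps_HbO2, eps_Hb]
])


def estimate_so2(mu_a):
    """Estimate oxygen saturation from absorption at two wavelengths.

    Assumes the linear model
        mu_a(lambda) = ln(10) * (eps_HbO2(lambda) * C_HbO2 + eps_Hb(lambda) * C_Hb)
    and solves the resulting 2x2 system for the two concentrations.
    """
    mu_a = np.asarray(mu_a, dtype=float)
    c_hbo2, c_hb = np.linalg.solve(np.log(10) * EPS, mu_a)
    # sO2 is the oxygenated fraction of total haemoglobin.
    return c_hbo2 / (c_hbo2 + c_hb)


# Usage: simulate absorption for known concentrations, then recover sO2.
true_conc = np.array([0.0016, 0.0004])      # [C_HbO2, C_Hb] in M, i.e. sO2 = 0.8
mu_a_sim = np.log(10) * EPS @ true_conc     # forward model
so2 = estimate_so2(mu_a_sim)
```

With the simulated input above, `so2` recovers the saturation of 0.8 used to generate the data, up to floating-point error. In a real measurement the photoacoustic amplitude also depends on the local light fluence, which would have to be estimated or compensated before this step.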

3. Optical computing

Light has the fastest propagation speed in physical space and can carry information across multiple dimensions (time, space, spectrum, etc.), making optical computing one of the ideal paradigms for building next-generation high-performance computing systems. With its high bandwidth, high parallelism, and low power consumption, optical computing promises, in theory, better energy efficiency and computational speed than electronic computing. However, existing optical computing still faces challenges such as insufficient computational power, difficulties in dynamic computation, and low training efficiency. To address these issues, we propose Monet (multi-channel optical neural networks) for advanced machine vision tasks, DANTE (DuAl-Neuron opTical-artificial lEarning) for large-scale optical neural network training, and a photonic neuromorphic architecture for lifelong learning. For more details, please visit the Project page.

4. AI + Optics

AI + optics is an interdisciplinary frontier that leverages artificial intelligence to revolutionize optical systems and technologies. By integrating AI algorithms with optical devices, this field aims to enhance image acquisition, processing, and interpretation. For example, AI-powered optimization can improve the design and performance of optical lenses and filters, enabling more efficient, powerful, and compact systems. In imaging applications, AI can reconstruct high-quality images from limited or noisy data. Furthermore, combining AI with optical computing opens pathways for ultra-fast data processing, leveraging the speed and parallelism inherent in optical systems.

5. Computational imaging + Large AI models

The integration of computational imaging with large AI models represents a transformative approach to visual data acquisition and interpretation. By combining the physical modeling of computational imaging with the semantic understanding of large vision models (AI systems pre-trained on extensive datasets), it becomes possible to extract richer insights from the captured data. This synergy enables applications such as zero-shot image and 3D reconstruction, cross-modal visual analysis, and more. It will not only bridge the gap between raw sensor data and high-level understanding but also open new opportunities for innovation in imaging systems, particularly in challenging environments where traditional techniques fall short.

6. Computational imaging + Embodied AI

The key difference between conventional computational imaging and computational imaging integrated with embodied AI lies in interaction and adaptability. Conventional computational imaging focuses on static tasks like reconstructing or enhancing images, often as a passive observer. In contrast, computational imaging with embodied AI enables dynamic, task-driven adaptation, integrating real-time feedback to guide physical actions such as navigation or object manipulation. This synergy combines advanced imaging with contextual understanding, allowing systems to perceive and interact with their environment, making decisions based on evolving visual and task-related inputs.